Dr.-Ing. Peter Fürsattel

Alumnus of the Pattern Recognition Lab of the Friedrich-Alexander-Universität Erlangen-Nürnberg

- Decomposition of 3D point clouds

- Range camera applications

- Intrinsic calibration of RGBD and ToF cameras

- Range camera calibration and error modeling

- Camera to X calibration

Calibration and Applications for Structured Light Cameras

Calibration of SL-Cameras and Geometric Primitive Refinement

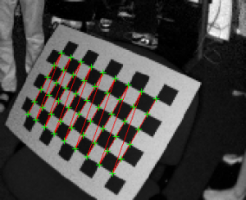

Structured-light cameras calculate 3-D information from disparity values. Typically, only discrete disparity values are used to reduce the computational complexity of stereo matching. This discretization also causes a discretization of the 3-D data and consequently limits the accuracy of an application.
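The effect of discrete disparities on depth can be sketched with the standard pinhole stereo relation z = f · b / d. This is a minimal illustration, not the camera model developed in this project; the focal length, baseline and one-pixel disparity step below are hypothetical values chosen only for the example.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Pinhole stereo relation z = f * b / d (depth in metres)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_step(disparity_px, focal_px, baseline_m, disparity_step_px=1.0):
    """Depth gap between two neighbouring discrete disparity levels."""
    near = depth_from_disparity(disparity_px + disparity_step_px, focal_px, baseline_m)
    far = depth_from_disparity(disparity_px, focal_px, baseline_m)
    return far - near


# Hypothetical sensor parameters, chosen only for illustration.
FOCAL_PX = 580.0
BASELINE_M = 0.075

for d in (40, 20, 10):
    z = depth_from_disparity(d, FOCAL_PX, BASELINE_M)
    dz = depth_step(d, FOCAL_PX, BASELINE_M)
    print(f"disparity {d:>2} px -> depth {z:.3f} m, step to next level {dz * 1000:.1f} mm")
```

Because depth is inversely proportional to disparity, the gap between neighbouring depth levels grows with distance, so the discretization hurts most for far objects.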

This limitation can be circumvented if prior information on the scene, e.g. a parametric model, is available. In the course of this project a new calibration method for off-the-shelf structured light cameras has been developed. Furthermore, a new method is proposed that uses the custom camera model to iteratively refine the parameters of initial estimates of parametric models.

The example shown on the right illustrates the refinement of a cuboid. For more details, please download the paper.

Decomposition of 3D Point Clouds and Parametric Model Fitting

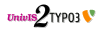

3D cameras, such as structured-light or time-of-flight sensors, capture point clouds of the environment at high frame rates. To analyze the content of a scene efficiently, it can be beneficial to decompose the point cloud into segments that can be represented by simple models, e.g. planes, spheres or cuboids. Challenges in this context are searching for these models efficiently, determining which points belong to each segment, achieving accurate final models, and keeping the impact of sensor noise low.

In the example shown above, a simple scene is decomposed into several planar segments by analyzing the normals of the point cloud.
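The idea of grouping points by their normals can be sketched as follows. This is a simplified greedy clustering written for illustration, not the decomposition method used in this project. It assumes that unit-length normals have already been estimated for every point; the function name and both thresholds are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def segment_by_normals(points, normals, max_angle_deg=10.0, max_offset=0.02):
    """Greedily group points into planar segments.

    A point joins a segment when its normal is within max_angle_deg of the
    segment's reference normal and it lies within max_offset of the
    segment's reference plane. Returns one segment label per point.
    """
    cos_limit = math.cos(math.radians(max_angle_deg))
    segments = []  # list of (reference normal n, plane offset d with n.p = d)
    labels = []
    for p, n in zip(points, normals):
        for label, (ref_n, ref_d) in enumerate(segments):
            if dot(n, ref_n) >= cos_limit and abs(dot(ref_n, p) - ref_d) <= max_offset:
                labels.append(label)
                break
        else:
            # No existing segment fits: start a new one from this point.
            segments.append((n, dot(n, p)))
            labels.append(len(segments) - 1)
    return labels


# Toy scene: a floor (z = 0) and a wall (x = 1), with ideal unit normals.
points = [(0.0, 0.0, 0.0), (0.5, 0.2, 0.0), (1.0, 0.3, 0.4), (1.0, 0.8, 0.9), (0.2, 0.9, 0.0)]
normals = [(0, 0, 1), (0, 0, 1), (1, 0, 0), (1, 0, 0), (0, 0, 1)]
labels = segment_by_normals(points, normals)
```

With real sensor data the normals are noisy, so the reference normal of a segment would typically be re-estimated as points are added rather than fixed by the first point as in this sketch.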

Ground Truth for Range Camera Evaluation

1. Error Analysis of Time-of-Flight Sensors

Time-of-flight (ToF) cameras suffer from systematic errors, which can be an issue in many application scenarios. In this study, the error characteristics of eight different ToF cameras are investigated, including the Kinect V2 and other recent ToF sensors. We present up to six experiments for each camera to quantify different types of errors: temperature-related errors, temporal noise, errors related to the sensor's integration time, internal scattering effects, amplitude-related errors and non-linear distance errors.

The complete study, including a description of the experimental setups, can be found in the paper.
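As an illustration of one of these error types, temporal noise is commonly quantified as the per-pixel standard deviation over repeated captures of a static scene. The sketch below shows this common approach under that assumption; it is not taken from the study, and the function name and sample values are made up.

```python
from statistics import mean, stdev


def temporal_noise(frames):
    """Per-pixel mean and sample standard deviation over a stack of frames.

    frames: list of distance images (each a list of rows, in metres),
    all captured from a static scene with a static camera.
    """
    rows, cols = len(frames[0]), len(frames[0][0])
    mean_img = [[0.0] * cols for _ in range(rows)]
    std_img = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            samples = [frame[r][c] for frame in frames]
            mean_img[r][c] = mean(samples)
            std_img[r][c] = stdev(samples)
    return mean_img, std_img


# Made-up 1x2 distance images (metres) from three repeated captures.
frames = [[[1.000, 2.00]], [[1.002, 2.01]], [[0.998, 1.99]]]
mean_img, std_img = temporal_noise(frames)
```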

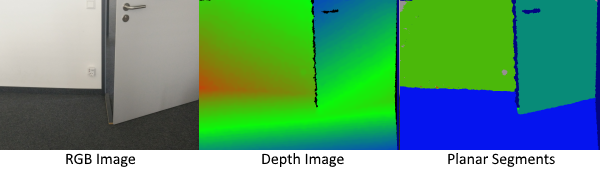

2. Laser Scanner to Camera Calibration

Evaluating the accuracy of a 3-D computer vision application or of a range camera requires accurate reference data. Such data can be acquired with terrestrial laser scanners. Before the reference data can be compared to the camera data, it is necessary to align the coordinate systems of the two data sets. In the course of this project, a new automatic calibration method has been developed which calculates the unknown spatial transformation between a laser scanner and a 2-D camera based on a single calibration scene (see figure below). The method has been designed with a particular focus on the generation of reference distance data for range camera evaluation and, aside from the calibration itself, also allows the simple generation of reference distance images. In the example shown below, the spatial relation is estimated from the point cloud and the calibration image. This makes it possible to generate virtual distance images of the point cloud as seen from the perspective of the camera.
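Once the transformation between scanner and camera is known, a virtual distance image can be rendered by projecting the scanner points into the camera. The following is a minimal sketch assuming an ideal pinhole camera without lens distortion; it is not the implementation from the paper, and the function name, intrinsics and transformation values are hypothetical.

```python
import math


def render_distance_image(points, rotation, translation, fx, fy, cx, cy, width, height):
    """Project scanner points into a pinhole camera and keep the nearest
    distance per pixel. Pixels that no point falls into stay at None."""
    image = [[None] * width for _ in range(height)]
    for p in points:
        # Rigid transform from scanner to camera coordinates: q = R p + t.
        q = [sum(rotation[i][j] * p[j] for j in range(3)) + translation[i] for i in range(3)]
        if q[2] <= 0:
            continue  # point lies behind the camera
        u = int(round(fx * q[0] / q[2] + cx))
        v = int(round(fy * q[1] / q[2] + cy))
        if not (0 <= u < width and 0 <= v < height):
            continue  # point projects outside the image
        distance = math.sqrt(q[0] ** 2 + q[1] ** 2 + q[2] ** 2)
        if image[v][u] is None or distance < image[v][u]:
            image[v][u] = distance
    return image


# Hypothetical calibration result: identity rotation, 10 cm shift along z.
R = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
t = [0.0, 0.0, 0.1]
points = [(0.0, 0.0, 1.9), (0.0, 0.0, 2.9), (5.0, 0.0, 0.5)]
image = render_distance_image(points, R, t, fx=100.0, fy=100.0, cx=2.0, cy=2.0, width=5, height=5)
```

Keeping only the nearest point per pixel is a simple way to handle points that are hidden behind others from the camera's viewpoint; a dense reference image would additionally need to fill pixels that no scanner point hits.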

The complete method and two range camera evaluations can be found in the paper.

Intrinsic Calibration and Calibration Pattern Detection

1. OCPAD - Occluded Checkerboard Pattern Detector

OCPAD is a new checkerboard pattern detector based on ROCHADE. With this new method, partially visible calibration patterns can be detected. A pattern may be only partially visible because it is larger than the field of view or because an object occludes parts of it. In short, this detector allows you to:

- Find more checkerboard patterns than with other state-of-the-art methods

- Get your calibration images faster

- Obtain more accurate calibrations due to better distributed point correspondences

The paper and code can be found at the OCPAD project website.

2. ROCHADE - Checkerboard Pattern Detector

The robust and reliable detection of calibration patterns is typically the first step when calibrating a new camera. Aside from intrinsic calibration, such patterns can also be used for calibrating multi-camera setups or RGBD sensors, which sometimes consist of multiple sensors, e.g. structured light sensors.

ROCHADE is a checkerboard detection algorithm designed to achieve a high detection rate at high accuracy, especially with low-resolution cameras such as Time-of-Flight cameras. More information on the algorithm and its detection performance can be found in the respective paper.

A MATLAB® implementation of this algorithm and evaluation data can be downloaded freely. Download ROCHADE

+49 9131 85 27882

+49 9131 85 27270