Friedrich-Alexander-Universität Erlangen

Lehrstuhl für Mustererkennung

Martensstraße 3

91058 Erlangen

The idea behind Time-of-Flight endoscopy is to augment conventional endoscopic 2-D data with additional 3-D surface information. In collaboration with Richard Wolf GmbH, a first 2-D/3-D endoscope has recently been developed. This prototype acquires both 3-D distance and 2-D photometric color measurements, which are aligned using camera calibration. Combining these complementary data holds potential for applications in minimally invasive procedures, including instrument detection, tracking, and navigation assistance using augmented reality.
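Aligning the two modalities amounts to mapping each ToF measurement into the RGB image. The sketch below assumes a plain pinhole model without lens distortion, with intrinsics (`fx`, `fy`, `cx`, `cy`) and ToF-to-RGB extrinsics (`R`, `t`) taken from a prior calibration; all names and values are illustrative, not the prototype's actual software.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Mat3 = list[list[float]]


@dataclass
class PinholeCamera:
    """Intrinsics of the RGB camera; lens distortion is ignored in this sketch."""
    fx: float
    fy: float
    cx: float
    cy: float


def tof_to_rgb_frame(R: Mat3, t: Vec3, p: Vec3) -> Vec3:
    """Rigid ToF-to-RGB transform p' = R p + t (extrinsics assumed from calibration)."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project(cam: PinholeCamera, p: Vec3) -> tuple[float, float]:
    """Pinhole projection of a point in RGB camera coordinates to pixel (u, v)."""
    x, y, z = p
    if z <= 0:
        raise ValueError("point lies behind the RGB camera")
    return (cam.fx * x / z + cam.cx, cam.fy * y / z + cam.cy)


def rgb_pixel_for_tof_point(cam: PinholeCamera, R: Mat3, t: Vec3, p_tof: Vec3) -> tuple[float, float]:
    """RGB pixel from which a 3-D ToF measurement would take its color."""
    return project(cam, tof_to_rgb_frame(R, t, p_tof))
```

For example, with `fx = fy = 500`, `(cx, cy) = (320, 240)`, identity `R` and `t = (2, 0, 0)`, the ToF point `(8, -5, 50)` maps to pixel `(420.0, 190.0)`; its color can then be read from the RGB image at that location.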

This project is carried out in collaboration with the German Cancer Research Center (DKFZ) in Heidelberg and the Minimally invasive Interdisciplinary Therapeutical Intervention (MITI) group of the Technical University (TU) Munich.

In minimally invasive surgery, hybrid 3-D endoscopy is an evolving field of research that aims to augment conventional video-based systems with metric 3-D measurements. One crucial issue with these systems arises from specular reflections, which result in overexposed RGB values and invalid range measurements. In this paper, we address this problem by registering video and range information acquired from different viewpoints using a patch-based approach. This allows us to replace invalid measurements caused by specular reflections in one view with valid data from non-specular regions in the other view. In contrast to previous approaches that employ interpolation techniques, our method uses actual scene information, which is advantageous in a medical environment. In our experiments, we show that in common situations our method decreases the mean absolute error by more than 30% on average compared to conventional interpolation. Moreover, in challenging scenarios we outperform interpolation by more than 1 mm and reconstruct important structures that conventional interpolation inherently cannot restore.
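Once the second view has been registered onto the first, filling specular regions becomes a per-pixel substitution rather than an interpolation. A minimal sketch, assuming the patch-based registration has already warped view B onto view A's pixel grid, and that a pixel counts as specular when all RGB channels reach a hypothetical saturation threshold of 250; neither the threshold nor the function names come from the paper.

```python
from typing import Optional

RGB = tuple[int, int, int]
RangeMap = list[list[Optional[float]]]


def specular_mask(rgb: list[list[RGB]], threshold: int = 250) -> list[list[bool]]:
    """Flag pixels whose three channels are all near saturation (hypothetical rule)."""
    return [[min(px) >= threshold for px in row] for row in rgb]


def fill_from_second_view(range_a: RangeMap, mask_a: list[list[bool]],
                          range_b_registered: RangeMap) -> RangeMap:
    """Replace specular or missing range values of view A with data from view B.

    Assumes view B has already been warped onto A's pixel grid (the patch-based
    registration itself is not shown) and that B's own invalid pixels are None.
    Pixels invalid in both views stay None: nothing is interpolated.
    """
    filled: RangeMap = []
    for row_a, row_m, row_b in zip(range_a, mask_a, range_b_registered):
        new_row: list[Optional[float]] = []
        for a, is_specular, b in zip(row_a, row_m, row_b):
            if is_specular or a is None:
                new_row.append(b)  # actual measurement from view B, or None
            else:
                new_row.append(a)  # keep the valid measurement of view A
        filled.append(new_row)
    return filled
```

Keeping doubly invalid pixels as `None` makes explicit where real scene data is unavailable, instead of hiding the gap behind interpolated values.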

Time-of-Flight (ToF) cameras are a novel and rapidly developing technology for acquiring 3-D surfaces. In recent years they have attracted interest from many fields, including 3-D endoscopy. However, preprocessing of the acquired images is mandatory due to the low signal-to-noise ratio of current sensors. One way to increase image quality is the non-local means (NLM) filter, which denoises a pixel by averaging pixels whose surrounding neighborhoods are similar. In this paper we present an enhanced NLM filter for hybrid 3-D endoscopy. The introduced filter gathers structural information from an RGB image that shows the same scene as the range image. To cope with camera movements, we incorporate a temporal component by considering a sequence of frames. Evaluated on simulated data, the algorithm improved range accuracy by 70% compared to the unfiltered image.
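The idea of an RGB-guided NLM filter can be sketched as a joint (cross) non-local means: patch similarities are measured in the guidance image, the weighted average is taken over range values, and candidate pixels are also drawn from other frames of the sequence. This is a generic illustration, assuming frames of equal size and a grayscale guidance image derived from RGB; the parameters `search`, `patch` and `h` are hypothetical, and motion compensation between frames is not modeled.

```python
import math

Image = list[list[float]]


def _patch_distance(g1: Image, y1: int, x1: int, g2: Image, y2: int, x2: int, r: int) -> float:
    """Mean squared difference of two (2r+1)x(2r+1) guidance patches, edges clamped."""
    h, w = len(g1), len(g1[0])
    total = 0.0
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            a = g1[min(max(y1 + dy, 0), h - 1)][min(max(x1 + dx, 0), w - 1)]
            b = g2[min(max(y2 + dy, 0), h - 1)][min(max(x2 + dx, 0), w - 1)]
            total += (a - b) ** 2
    return total / (2 * r + 1) ** 2


def guided_nlm(ranges: list[Image], guides: list[Image], t: int,
               search: int = 2, patch: int = 1, h: float = 10.0) -> Image:
    """Denoise range frame `t` with weights computed on the guidance frames.

    Candidates come from a spatial search window in every frame of the
    sequence, which is the temporal part of this sketch.
    """
    H, W = len(ranges[t]), len(ranges[t][0])
    out = [[0.0] * W for _ in range(H)]
    for y in range(H):
        for x in range(W):
            w_sum = v_sum = 0.0
            for f in range(len(ranges)):
                for yy in range(max(0, y - search), min(H, y + search + 1)):
                    for xx in range(max(0, x - search), min(W, x + search + 1)):
                        d = _patch_distance(guides[t], y, x, guides[f], yy, xx, patch)
                        weight = math.exp(-d / (h * h))
                        w_sum += weight
                        v_sum += weight * ranges[f][yy][xx]
            out[y][x] = v_sum / w_sum  # w_sum >= 1: the pixel always matches itself
    return out
```

Because similarity is judged on the guidance image, range values across a color edge receive near-zero weight, so depth discontinuities that coincide with color edges are preserved.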

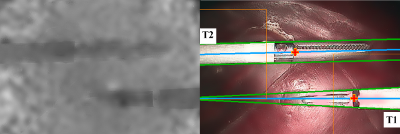

Minimally invasive procedures are important in modern surgery because they reduce operative trauma and recovery time. To enable robot-assisted interventions, automatic tracking of endoscopic tools is an essential task. State-of-the-art techniques rely on 2-D color information only, which is error-prone under varying illumination and the unpredictable color distribution within the human body. In this paper, we use a novel 3-D Time-of-Flight/RGB endoscope that allows both color and range information to be used to locate laparoscopic instruments in 3-D. The proposed technique computes a score indicating whether the color or the range information is more reliable and adapts the subsequent steps of the localization procedure accordingly. In experiments on real data, the tool tip is located with an average 3-D distance error of less than 4 mm compared to manually labeled ground truth, at a frame rate of 10 fps.
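The evaluation criterion quoted above, an average 3-D distance error against manually labeled tips, reduces to a mean Euclidean distance. A minimal sketch, assuming one detected and one labeled tip position per frame in millimetres; the reliability scoring and the localization itself are not shown, and the function name is hypothetical.

```python
import math

Point3 = tuple[float, float, float]


def mean_tip_error(detected: list[Point3], labeled: list[Point3]) -> float:
    """Average Euclidean 3-D distance between detected and labeled tool tips.

    Units follow the input coordinates (mm for the figure quoted above).
    """
    if not detected or len(detected) != len(labeled):
        raise ValueError("need the same, non-zero number of detections and labels")
    return sum(math.dist(p, q) for p, q in zip(detected, labeled)) / len(detected)
```

At 10 fps, the whole localization pipeline has a budget of 100 ms per frame.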

In the field of image-guided surgery, Time-of-Flight (ToF) sensors are of interest due to their fast acquisition of 3-D surfaces. However, the poor signal-to-noise ratio and low spatial resolution of today's ToF sensors require preprocessing of the acquired range data. Super-resolution is a technique for image restoration and resolution enhancement that exploits information from successive raw frames of an image sequence. We propose a super-resolution framework that runs on the graphics processing unit (GPU). Our framework achieves interactive frame rates, computing an upsampled image from 10 noisy frames of 200x200 px with an upsampling factor of 2 in 109 ms. The root-mean-square error of the super-resolved surface with respect to ground truth data is reduced by more than 20% relative to a single raw frame.
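A very simple instance of multi-frame super-resolution is shift-and-add: each low-resolution sample is placed at its sub-pixel position on the finer grid and overlapping samples are averaged. The sketch assumes the inter-frame motion is a known translation expressed in high-resolution pixels; it is a CPU illustration of the principle, not the GPU framework described above, which may use a different image model. The `rmse` helper mirrors the evaluation metric.

```python
import math
from typing import Optional

Image = list[list[float]]


def shift_and_add(frames: list[Image], shifts: list[tuple[int, int]],
                  factor: int = 2) -> list[list[Optional[float]]]:
    """Fuse low-resolution frames onto a grid upsampled by `factor`.

    shifts[k] = (dy, dx) is the assumed-known offset of frame k in
    high-resolution pixels. Overlapping samples are averaged, which also
    suppresses noise; HR pixels that receive no sample stay None.
    """
    h, w = len(frames[0]), len(frames[0][0])
    H, W = h * factor, w * factor
    acc = [[0.0] * W for _ in range(H)]
    cnt = [[0] * W for _ in range(H)]
    for frame, (dy, dx) in zip(frames, shifts):
        for y in range(h):
            for x in range(w):
                Y, X = y * factor + dy, x * factor + dx
                if 0 <= Y < H and 0 <= X < W:
                    acc[Y][X] += frame[y][x]
                    cnt[Y][X] += 1
    return [[acc[Y][X] / cnt[Y][X] if cnt[Y][X] else None for X in range(W)]
            for Y in range(H)]


def rmse(a: Image, b: Image) -> float:
    """Root-mean-square error between two equally sized surfaces."""
    sq = [(p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    return math.sqrt(sum(sq) / len(sq))
```

Practical methods estimate the shifts by registration and fill or regularize empty HR pixels instead of leaving them as `None`.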

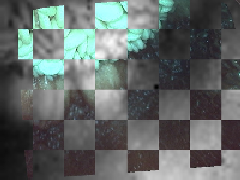

3-D endoscopy is an evolving field of research and offers great benefits for minimally invasive procedures. Besides the surface geometry, color texture is an indispensable feature for optimal visualization. Therefore, in this paper, we propose a sensor fusion of a Time-of-Flight (ToF) and an RGB sensor. This requires an intrinsic and extrinsic calibration of both cameras. In particular, the low resolution of the ToF camera (64x50 px) and inhomogeneous illumination preclude the use of standard calibration techniques. By enhancing the image data and using self-encoded markers for automatic checkerboard detection, we achieved a re-projection error of less than 0.23 px for the ToF camera. The relative transformation between both sensors, required for data fusion, was computed automatically.
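A re-projection error is typically computed by projecting the known 3-D checkerboard corners with the estimated camera parameters and comparing them with the detected corners; the ToF-to-RGB transformation follows from the board poses seen by both cameras. A sketch under simplifying assumptions: a pinhole model without distortion, RMS as the error measure (the abstract does not state which average is used), and a single board view for the relative pose, whereas practical calibrations combine many views. Function names are illustrative.

```python
import math

Mat3 = list[list[float]]
Vec3 = tuple[float, float, float]


def _mat_vec(R: Mat3, v: Vec3) -> Vec3:
    return tuple(sum(R[i][j] * v[j] for j in range(3)) for i in range(3))


def project(K: tuple[float, float, float, float], R: Mat3, t: Vec3, X: Vec3) -> tuple[float, float]:
    """Pinhole projection (no lens distortion) with intrinsics K = (fx, fy, cx, cy)."""
    fx, fy, cx, cy = K
    xc, yc, zc = (a + b for a, b in zip(_mat_vec(R, X), t))
    return (fx * xc / zc + cx, fy * yc / zc + cy)


def rms_reprojection_error(K, R: Mat3, t: Vec3, board_points: list[Vec3],
                           detected_px: list[tuple[float, float]]) -> float:
    """RMS pixel distance between detected corners and projected board corners."""
    sq = [math.dist(project(K, R, t, X), uv) ** 2 for X, uv in zip(board_points, detected_px)]
    return math.sqrt(sum(sq) / len(sq))


def relative_pose(R1: Mat3, t1: Vec3, R2: Mat3, t2: Vec3) -> tuple[Mat3, Vec3]:
    """Transform from camera 1 to camera 2 given both poses of the same board.

    X_c = R_c X + t_c for c in {1, 2}  =>  X_2 = R X_1 + t with
    R = R2 R1^T and t = t2 - R t1.
    """
    R = [[sum(R2[i][k] * R1[j][k] for k in range(3)) for j in range(3)] for i in range(3)]
    Rt1 = _mat_vec(R, t1)
    return R, tuple(t2[i] - Rt1[i] for i in range(3))
```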

One of the greatest challenges for computer-assisted systems in laparoscopic interventions is the accurate and fast intraoperative reconstruction of the organ surface. While reconstruction techniques based on multiple-view methods, such as stereo reconstruction, have long been a subject of research, the world's first Time-of-Flight (ToF) endoscope was presented only recently. Its advantages over stereo are the high update rate and a dense depth image, regardless of the observed scene. On the other hand, it suffers from drawbacks such as poor accuracy caused by high noise and systematic errors. To combine the advantages of both techniques, a concept is developed for fusing ToF endoscopy with a stereo-like multiple-view approach (structure from motion). The approach requires no additional imaging modality such as a stereoscope; instead, it uses the (mono) color data that the ToF endoscope acquires anyway. First results show that this approach can improve the accuracy of the surface reconstruction.
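The abstract does not detail how the two techniques are fused. As a hedged illustration only: a monocular structure-from-motion reconstruction is known only up to a global scale, which metric ToF depths at matched points can fix, and two depth estimates of the same point can then be combined by inverse-variance weighting. Both steps are generic building blocks chosen for this sketch, not the method of the work described here; function names and variances are hypothetical.

```python
def sfm_scale(d_sfm: list[float], d_tof: list[float]) -> float:
    """Least-squares scale s minimizing sum (d_tof - s * d_sfm)^2 over matched points."""
    num = sum(a * b for a, b in zip(d_sfm, d_tof))
    den = sum(a * a for a in d_sfm)
    if den == 0:
        raise ValueError("SfM depths must not all be zero")
    return num / den


def fuse_depth(d1: float, var1: float, d2: float, var2: float) -> float:
    """Inverse-variance weighted average of two depth estimates of the same point."""
    w1, w2 = 1.0 / var1, 1.0 / var2
    return (w1 * d1 + w2 * d2) / (w1 + w2)
```

The weighting lets the less noisy estimate dominate, which matches the motivation of compensating the high ToF noise with a multiple-view estimate.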